New Delhi: The Union Government has taken a tough stance against the rising menace of AI-generated videos and deepfake content, introducing stringent new rules that significantly tighten accountability for social media platforms.

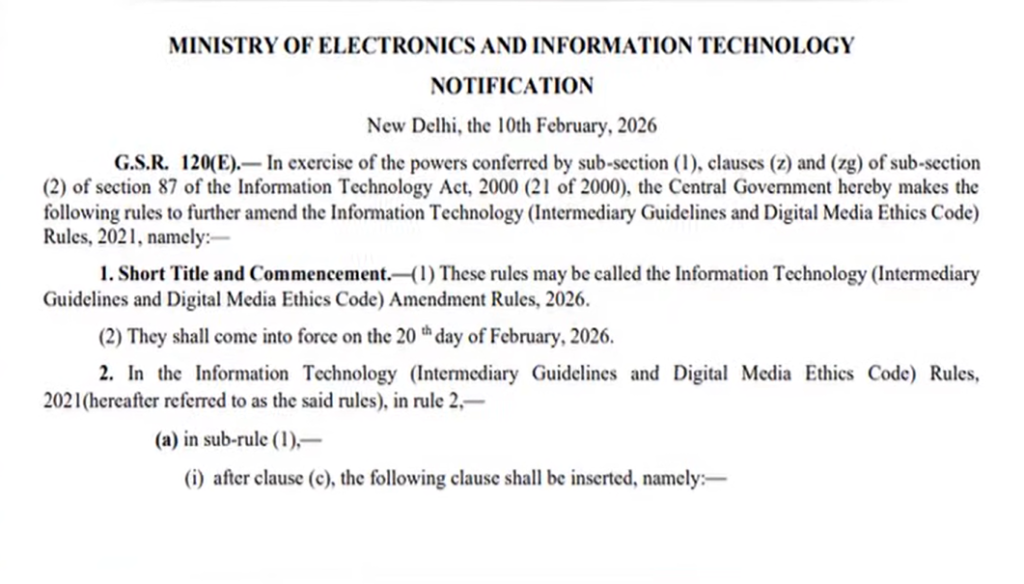

Under the newly notified Information Technology Rules, social media companies will now be required to remove AI-generated, deepfake or synthetic content within three hours of receiving a takedown order from the government or a court. Earlier, platforms had up to 36 hours to comply.

The new rules also make it mandatory for social media platforms to clearly label AI-generated content. Any post created using artificial intelligence must be prominently marked as “AI-generated content” so that users are not misled.

Failure to comply with these provisions will invite strict regulatory action against the platforms concerned.

The government has further directed companies to actively flag and prevent the circulation of illegal, false or fabricated material, including content related to child exploitation, impersonation, deepfakes, synthetic media, explosive material or misleading documents.

Officials said the revised rules aim to curb the rapid spread of harmful digital content, which often goes viral long before corrective action is taken. The new IT Rules will take effect on February 20.

Individuals affected by deepfake misuse have welcomed the decision, calling it long overdue. Several victims have described how AI-generated videos with fake voices and manipulated visuals have been used to promote fraudulent schemes, misleading investments and fake medical advertisements.

Experts believe the three-hour takedown mandate could significantly reduce public harm, financial fraud and reputational damage, marking a decisive shift in India’s digital governance framework.